Before apps, algorithms, and cloud platforms came to define modern life, a soft-spoken American mathematician and engineer quietly reimagined communication from the ground up. He showed that messages—spoken words, pictures, music, numbers—can be treated as sequences of symbols, measured in discrete units, and transmitted reliably even through noisy channels. That insight gave engineers a new language, complete with limits, trade-offs, and guarantees, and it gave the digital age its grammar. This article traces the ideas, experiments, and habits of mind that transformed Claude Elwood Shannon from a tinkering Midwestern kid into the architect of the information era—and explains how his framework still shapes every network, storage system, and codec we use today.

In the pages that follow, we will move from childhood curiosities to Bell Labs breakthroughs; from Boolean circuits to capacity theorems; from wartime cryptography to playful inventions; and from telephony to neuroscience. Along the way, we will return, again and again, to a single unifying thread, Claude Shannon’s information theory, and see how a handful of clear principles can scale from toy mice to the global internet, from juggling machines to cloud data centers, and from scratch-built relays to machine learning pipelines.

A Curious Child in Michigan: Gears, Morse Code, and Homemade Gadgets

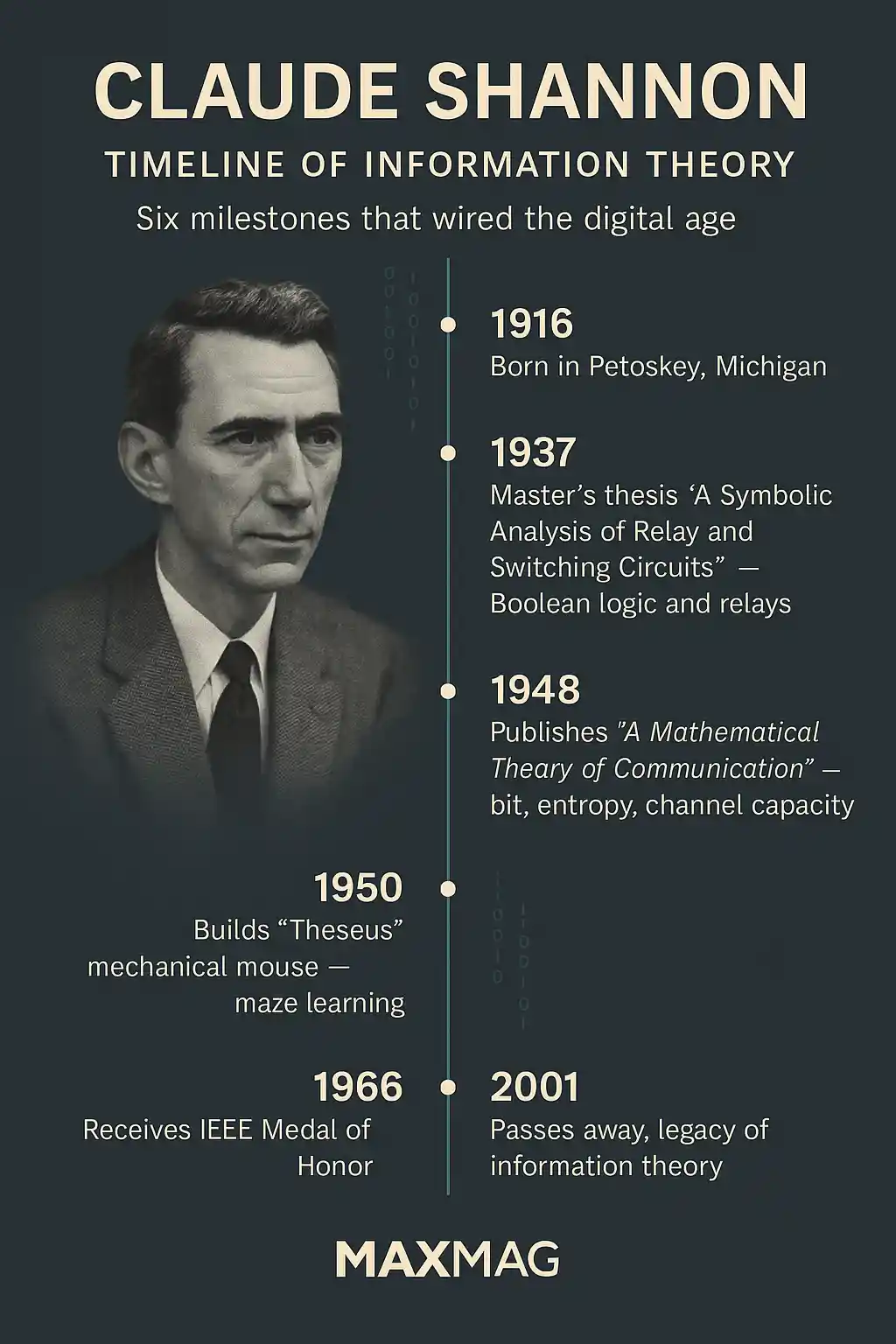

Claude Shannon was born in 1916 in the small town of Petoskey, Michigan, and grew up in nearby Gaylord. His childhood was powered by tools, wood scraps, surplus wire, and boundless curiosity. He strung a homemade telegraph between houses, repaired radios others discarded, and built gadgets that put ideas into motion. The do-it-yourself spirit of the era met a mind that loved patterns. From the start, he preferred to learn by making—cutting, soldering, and testing until something clicked. That habit of embodied thinking would become a lifelong signature, binding theory and device with unusual intimacy. In later decades, when his equations reshaped communication, he still approached problems as a builder. It is one reason Claude Shannon information theory speaks so fluently to practicing engineers: it grew from hands that knew how things work.

MIT and the Algebra of Circuits

Shannon studied both electrical engineering and mathematics at the University of Michigan, a dual fluency that would prove decisive. As a graduate student at the Massachusetts Institute of Technology he encountered Vannevar Bush’s differential analyzer, a room-sized analog computer that solved equations with wheels and shafts. Watching a machine “think” crystallized a daring idea: perhaps logic could be wired. In his 1937 master’s thesis, “A Symbolic Analysis of Relay and Switching Circuits,” Shannon demonstrated that the on/off behavior of relays maps cleanly onto Boolean algebra: contacts wired in series behave like AND, and contacts wired in parallel behave like OR. Circuits could therefore be designed, simplified, and proven correct using symbols rather than guesswork. That thesis effectively launched digital circuit theory, and it seeded a deeper intuition that would later flourish inside Shannon’s information theory: abstract structure can be embodied in physical hardware, and hardware can be reasoned about with the precision of mathematics.
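The series/parallel correspondence can be sketched in a few lines of Python. This is only an illustration, not a reconstruction of the thesis: the two example circuits and all function names are hypothetical, and switches are modeled as plain booleans (True for a closed contact).

```python
from itertools import product

def series(a, b):
    """Two contacts in series conduct only if both are closed (Boolean AND)."""
    return a and b

def parallel(a, b):
    """Two contacts in parallel conduct if either one is closed (Boolean OR)."""
    return a or b

def original_circuit(a, b, c):
    # (a in series with b) in parallel with (a in series with c): four contacts.
    return parallel(series(a, b), series(a, c))

def simplified_circuit(a, b, c):
    # a in series with (b in parallel with c): three contacts,
    # obtained from the distributive law ab + ac = a(b + c).
    return series(a, parallel(b, c))

def equivalent(f, g, n_inputs):
    """Check that two circuits agree for every combination of switch states."""
    states = product([False, True], repeat=n_inputs)
    return all(f(*s) == g(*s) for s in states)

print(equivalent(original_circuit, simplified_circuit, 3))
```

The exhaustive truth-table check is what "proven correct using symbols" amounts to for small circuits: the algebra suggests the simplification, and enumeration confirms that it saves a contact without changing behavior.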

Claude Shannon’s Information Theory: From Uncertainty to Bit

In 1948 Shannon published “A Mathematical Theory of Communication” in the Bell System Technical Journal, a paper whose reach rivals that of thermodynamics or calculus in engineering practice. He defined a quantitative measure of uncertainty, called entropy, and adopted the bit (a name he credited to John Tukey) as the natural unit of information. His theory reframed communication as a probabilistic game: a sender chooses one message from many possibilities; a channel corrupts symbols with noise; a receiver tries to infer what was sent. The central achievement of Shannon’s information theory was to show that for any given channel there exists a maximum reliable data rate, its capacity, and that clever coding schemes can make error rates arbitrarily small for any rate below that limit.
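To make entropy and capacity concrete, here is a minimal sketch. The entropy function follows the standard definition; the channel is assumed to be a binary symmetric channel (each bit flipped independently with a fixed probability), which is a textbook model chosen for illustration rather than anything the paragraph above specifies. The function names are hypothetical.

```python
import math

def entropy_bits(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def bsc_capacity(flip_prob):
    """Capacity, in bits per channel use, of a binary symmetric channel.

    Assumed model: every transmitted bit is flipped independently
    with probability flip_prob.
    """
    return 1.0 - entropy_bits([flip_prob, 1.0 - flip_prob])

print(entropy_bits([1.0]))        # a certain outcome: no uncertainty
print(entropy_bits([0.5, 0.5]))   # a fair coin flip
print(entropy_bits([0.25] * 4))   # four equally likely symbols
print(bsc_capacity(0.11))         # a channel that flips about one bit in nine
```

Under this model, a channel that flips every bit with probability one half has zero capacity: its output is statistically independent of its input, so no code can help.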

Core Concepts of Claude Shannon’s Information Theory

Entropy measures unpredictability. A source that always emits the same symbol has zero entropy; a source with many equally likely symbols has high entropy. Whenever a source is more predictable than a uniform one, compression can exploit the gap, assigning short codewords to frequent symbols and longer ones to rare symbols, squeezing out redundancy without losing content. Channels inject errors; error-correcting codes add carefully structured redundancy back in so the receiver can detect and fix mistakes. The triumph of Shannon’s information theory is to prove that, for a single point-to-point link and sufficiently long blocks, these two processes (source coding and channel coding) can be separated without sacrificing optimality, a modularity that profoundly simplifies system design.
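The idea of short codewords for frequent symbols can be illustrated with Huffman coding, a classic source-coding algorithm that postdates Shannon's paper. The sketch below computes only codeword lengths, not the codewords themselves, and the four-symbol source is a made-up example whose probabilities are powers of one half.

```python
import heapq
import math

def huffman_code_lengths(probs):
    """Return the codeword length Huffman's algorithm assigns to each symbol."""
    # Heap entries: (subtree probability, tie-breaker, symbols in the subtree).
    heap = [(p, i, [s]) for i, (s, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    lengths = {s: 0 for s in probs}
    tie_breaker = len(heap)
    while len(heap) > 1:
        p1, _, symbols1 = heapq.heappop(heap)
        p2, _, symbols2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        for s in symbols1 + symbols2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, tie_breaker, symbols1 + symbols2))
        tie_breaker += 1
    return lengths

# Hypothetical source, chosen so the arithmetic is easy to follow.
source = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
lengths = huffman_code_lengths(source)
average_length = sum(source[s] * lengths[s] for s in source)
entropy = sum(-p * math.log2(p) for p in source.values() if p > 0)
print(lengths, average_length, entropy)
```

For this particular source the average codeword length equals the entropy exactly; for general distributions a Huffman code lands within one bit per symbol of it.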

Capacity, Channels, and the Trade-Offs That Govern Digital Design

Once you quantify uncertainty and noise, you can compute a channel’s limits. For a band-limited channel with Gaussian noise, capacity rises with bandwidth and signal power and falls with noise; at a fixed signal-to-noise ratio it grows in proportion to bandwidth, but only logarithmically with that ratio. But even more important than any single formula is the habit of thinking in trade-offs. Need higher reliability? Spend more redundancy or accept lower rate. Need lower latency? Reduce block size and tolerate a little inefficiency. Need better coverage? Trade rate for power or bandwidth. This way of reasoning (clear, quantitative, and constraint-aware) became the default mental model of communications engineers, and its backbone is the discipline introduced by Shannon’s information theory.
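The dependence of capacity on bandwidth, power, and noise can be made concrete with the Shannon-Hartley formula. It applies to a band-limited channel with additive white Gaussian noise, a model assumed here for illustration. The function name and the numerical values are hypothetical.

```python
import math

def awgn_capacity_bps(bandwidth_hz, signal_power_w, noise_density_w_per_hz):
    """Shannon-Hartley capacity C = B * log2(1 + S / (N0 * B)) in bits/second."""
    snr = signal_power_w / (noise_density_w_per_hz * bandwidth_hz)
    return bandwidth_hz * math.log2(1.0 + snr)

# Illustrative numbers only: 1 MHz of bandwidth at a signal-to-noise ratio of 100.
base = awgn_capacity_bps(1e6, 1e-6, 1e-14)
more_power = awgn_capacity_bps(1e6, 2e-6, 1e-14)
more_bandwidth = awgn_capacity_bps(2e6, 1e-6, 1e-14)
more_noise = awgn_capacity_bps(1e6, 1e-6, 2e-14)

print(f"baseline:       {base / 1e6:.2f} Mbit/s")
print(f"double power:   {more_power / 1e6:.2f} Mbit/s")
print(f"double band:    {more_bandwidth / 1e6:.2f} Mbit/s")
print(f"double noise:   {more_noise / 1e6:.2f} Mbit/s")
```

Doubling the power buys far less than doubling the bandwidth in this regime, because power sits inside the logarithm. That asymmetry is one reason spectrum is such a contested resource.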

Claude Shannon’s Information Theory in Practice: Compression, Coding, Capacity

Every modern media format is a monument to these ideas. A photo can be compressed to a fraction of its original size without visible loss because images have statistical patterns (edges, textures, repeated colors) that a coder can exploit. Audio codecs trim sounds our ears are unlikely to perceive; video codecs predict frames from motion and texture. On the other end of the pipeline, channel codes add redundancy in carefully structured patterns that let receivers correct errors caused by fading, interference, or thermal noise. The discipline that unifies these steps is the same discipline that animates Shannon’s information theory, and it is why streamed movies, video calls, and cloud games feel effortless even though the physics underneath are unforgiving.
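As a small instance of structured redundancy, the sketch below implements the Hamming (7,4) code, which protects four data bits with three parity bits and corrects any single flipped bit. It is offered only as an illustration of the principle; the codes used in modern radios and storage systems are far more elaborate. Bits are represented as Python integers 0 and 1, and the function names are hypothetical.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits into 7 code bits (positions 1..7, parity at 1, 2, 4)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4   # checks positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # checks positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # checks positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(code_bits):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(code_bits)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome, read as a binary number, is the 1-based error position.
    error_position = s1 + 2 * s2 + 4 * s3
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

word = [1, 0, 1, 1]
sent = hamming74_encode(word)
received = sent.copy()
received[4] ^= 1   # the channel flips one bit
print(hamming74_decode(received) == word)
```

The price of this protection is rate: only four of every seven transmitted bits carry data. Two flipped bits in the same block will be miscorrected, which is why stronger codes are needed on noisier channels.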

Everyday Systems Built on Claude Shannon’s Information Theory

Picture a phone call in a busy city. Your voice is first compressed by a source coder tuned to human speech. The resulting bits are then wrapped by an error-correcting code; the radio adapts its modulation and coding rate as signal conditions change; packets are scheduled, acknowledged, and occasionally retransmitted; and the whole protocol stack is orchestrated to respect capacity limits. When the call “just works,” it is because layer after layer is honoring the guarantees proved inside Claude Shannon information theory, translating mathematical existence proofs into lived reliability.

Secrets and Signals: Cryptography in War and Peace

During World War II, Shannon analyzed secure communications, and in 1949 he published “Communication Theory of Secrecy Systems,” establishing the first rigorous foundation for cryptography as a branch of communication theory. He proved that a one-time pad, used correctly (with a truly random key at least as long as the message, kept secret and never reused), yields perfect secrecy: the ciphertext reveals nothing about the content of the plaintext. This was not a clever trick but a structural result, derived by analyzing uncertainty and correlation between variables. The same mathematics that quantifies information also quantifies ignorance, making it natural to see cryptography as another application of Shannon’s information theory.
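A one-time pad is simple enough to sketch directly. The example below uses bytewise XOR and Python's `secrets` module for key generation; the messages and function names are invented for illustration, and the code is a teaching sketch rather than a vetted cryptographic implementation.

```python
import secrets

def otp_encrypt(plaintext, key):
    """XOR each message byte with the matching key byte."""
    if len(key) != len(plaintext):
        raise ValueError("key must be exactly as long as the message")
    return bytes(p ^ k for p, k in zip(plaintext, key))

# XOR is its own inverse, so decryption is the same operation.
otp_decrypt = otp_encrypt

message = b"ATTACK AT DAWN"
key = secrets.token_bytes(len(message))   # fresh random key, used only once
ciphertext = otp_encrypt(message, key)
print(otp_decrypt(ciphertext, key) == message)

# Why the ciphertext alone reveals nothing: for ANY other message of the
# same length there is a key that "decrypts" the ciphertext to it.
decoy = b"RETREAT AT TEN"
decoy_key = otp_encrypt(ciphertext, decoy)
print(otp_decrypt(ciphertext, decoy_key) == decoy)
```

The second half of the example is the intuition behind perfect secrecy: since every same-length plaintext is consistent with the ciphertext under some equally likely key, an eavesdropper learns nothing about which one was sent. Reusing a key breaks this argument.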

Play as Method: Mice, Juggling, and the Lab as a Toy Box

Shannon never stopped building. He designed a mechanical mouse, Theseus, that “learned” a maze; he assembled a juggling machine that adjusted its patterns; he rode a unicycle through Bell Labs hallways; and he built the famous “Ultimate Machine,” a box whose only function was to switch itself off. These devices were jokes with a point: they kept ideas tangible. In an era when it is easy to worship abstractions, he reminded colleagues that insight often arrives with the smell of solder. That tactile confidence made it easier to trust the abstractions of Shannon’s information theory, because they came from a person who knew how the world resists, slips, and breaks.

Why Claude Shannon’s Information Theory Still Shapes the Digital World

It might be tempting to treat a 1948 theory as a historical artifact, useful in the age of telegraphs and telephone switchboards but distant from cloud platforms or machine learning. The opposite is true. The deeper and more software-defined our systems become, the more we need bedrock. Data centers rely on erasure codes to survive disk failures; content delivery networks juggle rates, losses, and latencies; wireless standards bake capacity-approaching codes into their DNA; and AI training pipelines compress datasets and gradients to fit bandwidth budgets. The design vocabulary, the way engineers talk about limits and levers, flows directly from Shannon’s information theory.

From Circuits to Silicon to AI: A Cascade of Influence

Shannon’s early marriage of Boolean algebra and relays made digital logic predictable. That predictability invited scale: transistorized circuits could be composed with confidence, then miniaturized and replicated. Once logic was scalable, computation was scalable; once computation was scalable, algorithms could chase new frontiers; once algorithms were abundant, data became a resource to be moved, stored, compressed, and protected. At every layer of that cascade, design choices depend on trade-offs formalized by Shannon’s information theory. It is a lineage that runs from a thesis desk to the world’s largest data centers, with each step resting on the one before it.

Crossing Disciplines: Biology, Neuroscience, and the Language of Codes

Information is not only a property of radios and routers. Biologists use entropy to measure structure in DNA, to quantify transcriptional variability across cells, and to model how organisms balance fidelity with energy cost when copying genetic material. Neuroscientists analyze spike trains as messages traveling through noisy channels, asking how brains trade metabolic expense for coding efficiency. These are not metaphors; they are quantitative imports. The tools of Claude Shannon information theory guide experiment design, suggest testable hypotheses, and anchor claims in numbers rather than adjectives.
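One quantity that appears in such analyses is mutual information, which measures how many bits a response carries about a stimulus. The sketch below computes it from a joint probability table. The two-stimulus, two-response tables are invented numbers for illustration, not experimental data, and the function name is hypothetical.

```python
import math

def mutual_information_bits(joint):
    """Mutual information I(X;Y) in bits.

    joint[i][j] is P(stimulus = i, response = j); all entries sum to 1.
    """
    p_stimulus = [sum(row) for row in joint]
    p_response = [sum(col) for col in zip(*joint)]
    total = 0.0
    for i, row in enumerate(joint):
        for j, p_xy in enumerate(row):
            if p_xy > 0:
                total += p_xy * math.log2(p_xy / (p_stimulus[i] * p_response[j]))
    return total

# Hypothetical joint distributions over two stimuli and two responses.
perfect = [[0.5, 0.0], [0.0, 0.5]]      # response always matches stimulus
noisy = [[0.4, 0.1], [0.1, 0.4]]        # response is wrong 20% of the time
unrelated = [[0.25, 0.25], [0.25, 0.25]]

for name, table in [("perfect", perfect), ("noisy", noisy), ("unrelated", unrelated)]:
    print(f"{name}: {mutual_information_bits(table):.3f} bits")
```

In practice the hard part is estimating the joint distribution from limited recordings; plugging raw frequencies into this formula tends to overestimate the information, so real studies apply bias corrections.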

The Philosophy of Limits—and the Freedom It Creates

Engineers are often accused of loving constraints, and with good reason: constraints clarify. Shannon’s capacity theorems do not dampen ambition; they aim it. When you know the speed limit of a channel, you stop chasing fantasies and start inventing systems that cruise near that limit with elegance and safety. The practical freedom that flows from limits is one of the quiet gifts of Claude Shannon information theory. Instead of wishful thinking, you get informed creativity: codes, modulations, protocols, and architectures that acknowledge physics and still deliver delight.

Lessons for Builders, Researchers, and Teachers

Shannon’s career offers a playbook that remains fresh. Define the problem precisely; distinguish what matters from what distracts; turn messy realities into crisp abstractions; separate concerns so teams can optimize independently; and then build prototypes that pressure-test the theory. Teachers can borrow his clarity to help students see the backbone before the nerves; researchers can borrow his humility to pursue depth instead of spectacle; and builders can borrow his playfulness to keep curiosity alive. The spirit that animates Claude Shannon information theory is not austere. It smiles, tinkers, and trusts that the world will yield if you ask it the right questions in the right language.

Case Study: How Streaming Video Survives Congestion

Consider a streaming service during a live sports event. Millions of viewers join at once, congesting networks and stressing servers. The encoder must compress fast-changing scenes without turning motion into mush; the transport must adapt rates as bandwidth fluctuates; error correction must heal packet losses without ballooning latency; and content delivery networks must place the right chunks of video near the right clusters of viewers. Each component is a negotiation with limits. Entropy models determine how much a scene can be compressed; capacity estimates govern rate adaptation; codes and interleavers mitigate losses; and edge caching smooths demand spikes. From end to end, the system is applied theory in action: the theorems of information theory turned into the comfort of a smooth play button.
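The rate-adaptation step can be sketched as a simple throughput-based rule: pick the highest encoded bitrate that fits within a safety margin of the measured bandwidth. The bitrate ladder, the 0.8 safety factor, and the function name are all hypothetical. Production players typically combine throughput estimates with buffer occupancy and other signals.

```python
def pick_bitrate(ladder_kbps, throughput_kbps, safety=0.8):
    """Choose the highest rung that fits within a fraction of measured throughput.

    Falls back to the lowest rung when nothing fits, so playback can continue.
    """
    budget = throughput_kbps * safety
    feasible = [rate for rate in sorted(ladder_kbps) if rate <= budget]
    return feasible[-1] if feasible else min(ladder_kbps)

# Hypothetical encoding ladder, in kilobits per second.
ladder = [400, 1200, 2500, 5000, 8000]

for measured in (300, 4000, 20000):
    print(f"measured {measured} kbit/s -> stream at {pick_bitrate(ladder, measured)} kbit/s")
```

The safety factor is the trade-off in miniature: a smaller value wastes available bandwidth but makes stalls less likely when the estimate turns out to be optimistic.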

Case Study: Robust Cloud Storage at Planetary Scale

Storing data across thousands of disks invites a grim arithmetic: some hardware will fail every day. To keep user data durable without paying an infinite replication tax, cloud providers use erasure codes. These codes cut files into slices and compute parity blocks from them, such that any sufficiently large subset of the stored pieces suffices to reconstruct the whole. The trick is choosing code rates and repair strategies that minimize bandwidth and rebuild time while meeting durability targets. That design exercise lives squarely inside the framework of uncertainty, redundancy, and rate, which is precisely the territory mapped by Claude Shannon’s ideas.
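The simplest erasure code is a single XOR parity block, which lets any one lost block be rebuilt from the survivors. The sketch below shows that case only; it assumes equal-length blocks, and the block contents and function names are made up. Large storage systems generally use codes such as Reed-Solomon that tolerate several simultaneous failures.

```python
def xor_blocks(blocks):
    """Bytewise XOR of equal-length blocks."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)

def encode(data_blocks):
    """Store the data blocks plus one parity block (tolerates one loss)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]

def recover(stored_blocks, lost_index):
    """Rebuild the block at lost_index from all the surviving blocks."""
    survivors = [b for i, b in enumerate(stored_blocks) if i != lost_index]
    return xor_blocks(survivors)

data = [b"shan", b"non!", b"bits"]   # hypothetical file cut into three slices
stored = encode(data)                # four blocks on four disks

# Simulate the failure of each disk in turn and rebuild its contents.
print(all(recover(stored, i) == stored[i] for i in range(len(stored))))
```

Here three data blocks cost four blocks of storage, an overhead of one third, whereas surviving one failure by plain replication would cost double. The repair, however, must read every surviving block, which is the bandwidth trade-off the paragraph above refers to.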

Research Threads: What Came After the Foundations

An entire research landscape unfurled from Shannon’s proofs: channel coding theory (from Hamming and Reed–Solomon to turbo and LDPC codes), source coding (from Huffman and arithmetic coding to modern neural compression), network information theory (multicast, interference alignment, and beyond), and statistical learning connections that study generalization through information measures. While the mathematics has grown more elaborate, the compass still points the same direction: measure uncertainty faithfully; spend redundancy wisely; respect capacity; and build systems that decompose cleanly. The skeleton is classic; the muscles are modern.

Character, Style, and the Craft of Writing Clearly

Read Shannon’s papers and you find a surprising absence of fanfare. Theorems arrive in calm prose, proofs are lean, and examples are surgical. This lucidity did cultural work: it made the field welcoming. Students could follow the argument without feeling scolded; practitioners could translate results into design choices without a second language. In an era drowning in jargon, the tone itself is a lesson in engineering ethics: write so the idea survives.

Trusted Gateways to the Shannon Legacy

Readers who want a compact overview can start with the Encyclopaedia Britannica biography of Claude Shannon, which balances life and work with editorial care. For a document-rich, engineering-focused account, the IEEE’s Engineering and Technology History Wiki profile collects primary sources, context, and photos from the Bell Labs era. These trustworthy resources complement the narrative here and help frame the reception and evolution of his ideas within the broader history of communications and computing.

Public Imagination: Why the Story Still Charms

Part of the enduring appeal is tonal: the combination of deep seriousness and whimsical play. You can picture him riding a unicycle past a chalkboard dense with proofs, then disappearing into a workshop full of gears and bicycle chains. The message is not that genius requires eccentricity; it is that permission to play is not a distraction from insight. Many of his devices embodied metaphors—learning a maze, juggling probabilities, toggling a switch—that mirror the abstractions at the heart of his theory.

Teaching the Next Generation

If you teach engineering or computer science, Shannon offers a template for curriculum design. Start with crisp distinctions: source versus channel, rate versus reliability, bandwidth versus power. Add the concept of capacity early to set expectations; demonstrate separation theorems so students learn to decompose problems; and then build labs where students compress real signals and recover them through noisy links. By the end, students should be able to diagnose a design like physicians: listen for symptoms, measure vital signs, and choose treatments—compression, coding, modulation—that respect the patient’s physiology. In doing so they internalize the practice that Claude Shannon information theory first made possible.
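A first lab exercise along these lines might use a repetition code to show the tension between rate and reliability. The sketch below computes, rather than simulates, the probability that majority voting fails when each copy of a bit is flipped independently with probability p. The binary symmetric channel model, the value p = 0.1, and the function name are assumptions for illustration.

```python
from math import comb

def repetition_error_prob(n, p):
    """Probability that a majority vote over n copies decodes the wrong bit.

    Assumes n is odd and each copy is flipped independently with probability p.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of repetitions")
    # Decoding fails when more than half of the copies are flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

for n in (1, 3, 5, 7):
    print(f"rate 1/{n}: residual error {repetition_error_prob(n, 0.1):.5f}")
```

Students see reliability improve only as the rate shrinks toward zero, which sets up the surprise of the channel coding theorem: better codes can drive the error down while holding the rate at any fixed value below capacity.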

Ethics and Reliability in a Connected World

Shannon’s framework is value-neutral but not impact-neutral. When information moves faster and more reliably, societies reorganize. That shift invites responsibility: securing communications against eavesdropping, safeguarding privacy when compressed data still leaks patterns, and designing protocols that degrade gracefully under stress. The ethics of infrastructure start with honest accounting of limits and risks—a habit learned from the same school that taught engineers to reason carefully about noise, redundancy, and failure.

Long-Horizon View: What Remains Hard

Some frontiers still resist easy answers. Distributed systems must trade consistency against availability under partition; wireless networks juggle interference in dense, heterogeneous environments; and multimodal data challenge today’s codecs with correlations that span space, time, and semantics. In each case, the winning strategies look familiar: build layered architectures; let modules specialize; and defend the signal against the universe’s talent for chaos. If the last seventy-five years are a guide, tomorrow’s breakthroughs will echo yesterday’s: new embodiments, same compass.

Conclusion: A Quiet Revolution That Never Ended

Claude Shannon did more than solve an engineering puzzle; he taught us how to think about information with a clarity that endures. Messages became choices among possibilities. Uncertainty became a quantity you can compute. Noise turned from a demon into a parameter. And the dream of reliable communication at scale became a design discipline with levers and limits. The devices in our pockets, the fiber beneath our streets, and the servers that knit the world together are living proof that the mathematics was right. The invitation, for anyone who builds or studies systems today, is to keep the spirit alive: define things cleanly, separate what can be separated, measure what matters, and approach the horizon with grace. That is the lasting promise of Claude Shannon information theory.